Finance is a heavily regulated industry, so explainable AI is critical for holding AI models accountable. Artificial intelligence is used to help assign credit scores, assess insurance claims, optimize investment portfolios and much more. If the algorithms behind these tools are biased, and that bias seeps into the output, it can have serious implications for an individual and, by extension, the company. An AI system should be able to explain its output and provide supporting evidence. Scalable Bayesian rule lists (SBRLs) help explain a model’s predictions by combining pre-mined frequent patterns into a decision list generated by a Bayesian statistics algorithm. This list consists of “if-then” rules, where the antecedents are mined from the data set and the set of rules and their order are learned.
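To make the “if-then” structure concrete, here is a minimal sketch of how a decision list is read at prediction time. It does not implement the Bayesian learning step: the rules, thresholds, field names and labels below are hypothetical and hand-written purely for illustration, whereas an SBRL would mine the antecedents and learn both the rules and their order from data.

```python
from typing import Callable, Dict, List, Tuple

# A rule is (human-readable antecedent, predicate over one applicant, label).
Rule = Tuple[str, Callable[[Dict[str, float]], bool], str]

# Hypothetical rules and thresholds, written by hand for illustration only.
# A real SBRL would learn the rule set and its ordering from data.
RULES: List[Rule] = [
    ("missed_payments >= 3", lambda a: a["missed_payments"] >= 3, "high risk"),
    ("debt_ratio > 0.6", lambda a: a["debt_ratio"] > 0.6, "high risk"),
    ("years_employed >= 5", lambda a: a["years_employed"] >= 5, "low risk"),
]
DEFAULT_LABEL = "medium risk"


def predict_with_reason(applicant: Dict[str, float]) -> Tuple[str, str]:
    """Return the label of the first matching rule plus the rule that fired."""
    for description, antecedent, label in RULES:
        if antecedent(applicant):
            return label, f"if {description} then {label}"
    # No antecedent matched, so the list falls through to its default.
    return DEFAULT_LABEL, f"else {DEFAULT_LABEL}"


if __name__ == "__main__":
    applicant = {"missed_payments": 0, "debt_ratio": 0.7, "years_employed": 10}
    print(predict_with_reason(applicant))
```

Because the rules are checked in order, the explanation for any prediction is simply the first rule that fired, which is what makes this kind of model easy to audit.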

Explainable AI: What Is It? How Does It Work? And What Role Does Data Play?

The contrastive explanation method (CEM) helps explain why a model made a particular prediction for a specific instance, providing insight into positive and negative contributing factors. It focuses on detailed explanations at a local level rather than globally. Explainable AI is a set of processes and methods that enable users to understand and trust the results and output created by AI/ML algorithms.

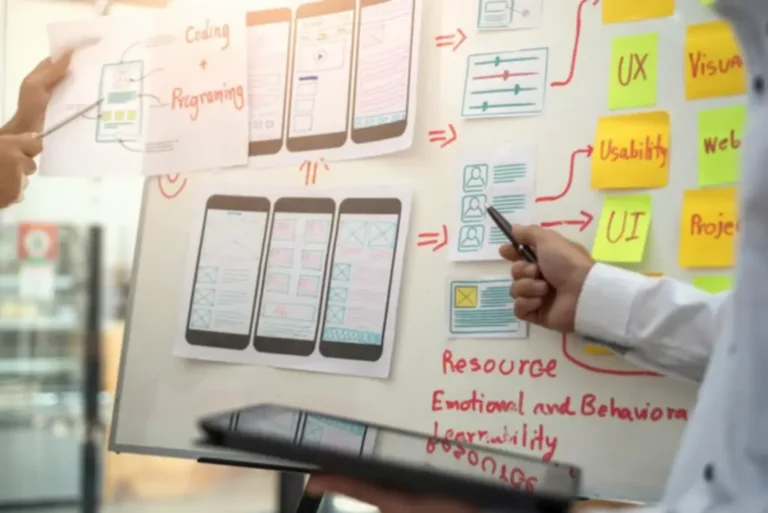

Development Phases: Building Reliable Models

State-of-the-art contributions to artificial intelligence in emergency medicine cover diagnostics and triage cases equally. As AI becomes increasingly prevalent, it is more important than ever to disclose how bias and the question of trust are being addressed. ChatGPT is a non-explainable AI, and when you ask questions like “The most important EU directives related to ESG”, you can get completely incorrect answers, even if they look correct. ChatGPT is a good example of how non-referenceable and non-explainable AI contributes significantly to exacerbating the problem of information overload instead of mitigating it.

What Is Explainable AI, or XAI?

- Without proper insight into how the AI is making its decisions, it can be difficult to monitor, detect and manage these kinds of issues.

- Human-agent interaction can be defined as the intersection of artificial intelligence, social science, and human–computer interaction (HCI); see Fig.

- Explainable AI can generate evidence packages that support model outputs, making it easier for regulators to inspect and verify the compliance of AI systems.

- Surrogate-based methods provide insight into the behavior of the black-box AI model by interpreting the surrogate model.

Use a credit risk sample model to select the deployment and set the data type for payload logging. In a typical LIME walkthrough, the code trains a random forest classifier on the iris dataset using the RandomForestClassifier class from the sklearn.ensemble module.

In the case of the iris dataset, the LIME explanation shows the contribution of each of the features (sepal length, sepal width, petal length, and petal width) to the predicted class (setosa, versicolor, or virginica) of the instance. Another approach finds “prototypes” in an existing machine learning program. A prototype can be thought of as a subset of the data that has a greater influence on the predictive power of the model.

As systems become increasingly sophisticated, the challenge of making AI decisions transparent and interpretable grows proportionally. Peters, Procaccia, Psomas and Zhou[101] present an algorithm for explaining the outcomes of the Borda rule using O(m²) explanations, and prove that this is tight in the worst case. Social choice theory aims at finding solutions to social decision problems that are based on well-established axioms.
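For readers unfamiliar with the Borda rule itself, the sketch below shows the voting rule whose outcomes are being explained. It is not the explanation algorithm from the cited work. It uses the common scoring convention in which a candidate ranked in position p out of m receives m − 1 − p points, and the alphabetical tie-break is an assumption made only to keep the example deterministic.

```python
from collections import defaultdict
from typing import Dict, List


def borda_scores(ballots: List[List[str]]) -> Dict[str, int]:
    """Sum Borda points: first place gets m - 1, last place gets 0."""
    scores: Dict[str, int] = defaultdict(int)
    for ranking in ballots:
        m = len(ranking)
        for position, candidate in enumerate(ranking):
            scores[candidate] += m - 1 - position
    return dict(scores)


def borda_winner(ballots: List[List[str]]) -> str:
    """Return the top-scoring candidate (ties broken alphabetically, an assumption)."""
    scores = borda_scores(ballots)
    return max(sorted(scores), key=lambda c: scores[c])


if __name__ == "__main__":
    ballots = [["a", "b", "c"], ["b", "a", "c"], ["b", "c", "a"]]
    print(borda_scores(ballots), borda_winner(ballots))
```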

It has many use cases, including understanding feature importances, identifying data skew, and debugging model performance. Local interpretable model-agnostic explanations (LIME) is a method that fits a surrogate glass-box model around the decision space of any black-box model’s prediction. LIME works by perturbing an individual data point and generating synthetic data, which is evaluated by the black-box system and ultimately used as a training set for the glass-box model. Five years from now, there will be new tools and methods for understanding complex AI models, even as these models continue to grow and evolve.
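The perturb, query and fit loop described for LIME can be sketched in plain Python. This is a simplified illustration of the idea, not the `lime` library's implementation: the Gaussian perturbation, the exponential proximity kernel, the parameter names and the unregularized weighted least-squares fit are all assumptions chosen to keep the example short and dependency-free.

```python
import math
import random
from typing import Callable, List, Sequence


def solve_linear_system(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def lime_style_explanation(
    black_box: Callable[[Sequence[float]], float],
    instance: Sequence[float],
    num_samples: int = 500,
    scale: float = 0.5,
    kernel_width: float = 1.0,
    seed: int = 0,
) -> List[float]:
    """Fit a proximity-weighted linear surrogate around `instance`.

    Returns one coefficient per feature (the intercept is dropped).
    """
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(num_samples):
        # 1. Perturb the instance to create a synthetic neighbor.
        sample = [v + rng.gauss(0.0, scale) for v in instance]
        # 2. Ask the black box for its output on that neighbor.
        targets.append(black_box(sample))
        rows.append([1.0] + sample)  # leading 1.0 is the intercept column
        # 3. Weight the neighbor by how close it is to the instance.
        dist2 = sum((s - v) ** 2 for s, v in zip(sample, instance))
        weights.append(math.exp(-dist2 / kernel_width ** 2))
    # 4. Solve the weighted normal equations (X^T W X) beta = X^T W y.
    k = len(instance) + 1
    xtwx = [[sum(w * r[i] * r[j] for w, r in zip(weights, rows))
             for j in range(k)] for i in range(k)]
    xtwy = [sum(w * r[i] * y for w, r, y in zip(weights, rows, targets))
            for i in range(k)]
    return solve_linear_system(xtwx, xtwy)[1:]


if __name__ == "__main__":
    # Hypothetical black box: nonlinear in the first feature.
    model = lambda x: x[0] ** 2 - 2.0 * x[1]
    print(lime_style_explanation(model, [2.0, 1.0]))
```

The returned coefficients are the local explanation: each one estimates how much the black-box output changes per unit change in that feature near the chosen instance. For a black box that is already linear, the surrogate recovers its coefficients up to floating-point error.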

This synergy is crucial for advancing AI technology in a way that is innovative, trustworthy, and in keeping with human values and ethical norms. As these domains expand, their integration will become a key milestone on the path to responsible and sophisticated AI systems. With AI services being integrated into fields such as health IT or mortgage lending, it is essential to ensure that the decisions made by AI systems are sound and trustworthy.

One of the key benefits of SHAP is that it is model-agnostic, so it can be applied to any machine-learning model. It also produces consistent explanations and handles complex model behaviors like feature interactions. Artificial intelligence (AI) has great potential to improve the health and well-being of people, but adoption in clinical practice is still limited.
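The Shapley values that SHAP builds on can be computed exactly for a handful of features by averaging each feature's marginal contribution over every ordering. The sketch below is a brute-force illustration, not the `shap` library's algorithm (which relies on approximations and model-specific shortcuts). Representing an "absent" feature by its value in a baseline vector is an assumption, and the model used in the demo is hypothetical.

```python
from itertools import permutations
from math import factorial
from typing import Callable, List, Sequence


def shapley_values(
    model: Callable[[Sequence[float]], float],
    instance: Sequence[float],
    baseline: Sequence[float],
) -> List[float]:
    """Exact Shapley values by enumerating all feature orderings.

    Cost grows as n!, so this is only practical for a few features.
    """
    n = len(instance)
    totals = [0.0] * n
    for order in permutations(range(n)):
        current = list(baseline)       # start with every feature "absent"
        previous = model(current)
        for i in order:
            current[i] = instance[i]   # switch feature i to its real value
            value = model(current)
            totals[i] += value - previous  # marginal contribution of i
            previous = value
    return [t / factorial(n) for t in totals]


if __name__ == "__main__":
    # Hypothetical model with an interaction between features 1 and 2.
    model = lambda x: 2.0 * x[0] + x[1] * x[2]
    print(shapley_values(model, [1.0, 2.0, 3.0], [0.0, 0.0, 0.0]))
```

In the demo, the interaction term is split evenly between the two features that produce it, and the attributions sum to the difference between the model's output on the instance and on the baseline.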

Simplify the process of model evaluation while increasing model transparency and traceability. These future developments and trends in explainable AI are likely to have significant implications across different domains and applications, and they may present new opportunities and challenges that shape the future of the technology. Overall, these examples and case studies demonstrate the potential benefits and challenges of explainable AI and can provide valuable insight into its potential applications and implications. SLEs are a key tool that characterize how your users experience network service, whether connected wirelessly, wired, or even off site over the WAN.

The other three principles revolve around the qualities of these explanations, emphasizing correctness, informativeness, and intelligibility. These principles form the foundation for achieving meaningful and accurate explanations, which may vary in execution based on the system and its context. When embarking on an AI/ML project, it is important to consider whether interpretability is required. Model explainability can be applied in any AI/ML use case, but if a detailed level of transparency is necessary, the selection of AI/ML methods becomes more limited. The RETAIN model is a predictive model designed to analyze Electronic Health Records (EHR) data.

Explainability approaches in AI are broadly categorized into global and local approaches. By addressing these five reasons, ML explainability through XAI fosters better governance, collaboration, and decision-making, ultimately leading to improved business outcomes. They relate to informed decision-making, reduced risk, increased AI confidence and adoption, better governance, more rapid system improvement, and the overall evolution and utility of AI in the world. Data networking, with its well-defined protocols and data structures, means AI can make incredible headway without fear of discrimination or human bias. When tasked with neutral problem spaces such as troubleshooting and service assurance, applications of AI can be well-bounded and responsibly embraced. This engagement also forms a virtuous cycle that can further train and hone AI/ML algorithms for continuous system improvements.

Each approach has its own strengths and limitations and can be useful in different contexts and situations. Explainable AI can mean a few different things, so defining the term itself is challenging. To some, it’s a design methodology: a foundational pillar of the AI model development process. Explainable AI is also the name of a set of features or capabilities expected from an AI-based solution, such as decision trees and dashboard components. The term can also refer to a way of using an AI tool that upholds the tenets of AI transparency. While all of these are valid examples of explainable AI, its most important role is to promote AI interpretability across a range of applications.
